33 Publication Bias

The scientific method works by building on work and ideas generated by previous studies present in the published literature. You may be familiar with the term “Standing on the shoulders of giants” immortalised on the Google Scholar homepage. In this process, novel ideas attract a lot of attention and are then tested as working hypotheses by a number of other workers whose work will ultimately add up to a collective assessment of whether the initial idea was valid or not. This iterative process for moving from ideas to hypotheses to theories is in the foundation of science, and relies on the abilities of scientists in different areas to replicate previous work and test the same hypotheses under different conditions. In the biological sciences, conditions under which organisms live are so variable that exact replication of studies is difficult, but still necessary to build our understanding of the world around us.

But what if those initial ideas are wrong? Shouldn’t we quickly find out when they are replicated in different laboratories? Ideally, this would be the case. But one of the issues that stands in the way of the scientific validation process is publication bias. The phenomenon of publication bias refers to all levels of the way in which studies are presented in the literature:

- the way in which prominent literature prompts scientists to test ideas

- the interest of journals to publish new and positive results

- the culture of highly impacting journals to accept high effect sizes from low sample sizes

- the potential for negative results to go unsubmitted or rejected for publication, especially if the sample size is small

- the influence of prominent scientists over their peers

- the dissemination of science through self-promotion or prominence in traditional media

- the positive feedback loop that results for researchers who have their ideas promoted

- from their employers providing promotion

- from funders supplying increased funding

- to other scientists who are more likely to follow and cite them

Ultimately, the current reward system in science, particularly associated with publication bias leads to the natural selection of bad science, a phenomenon that has legacy effects across generations of scientists (Smaldino & McElreath, 2016). In recent decades, we have lived in a scientific culture that encourages publication bias with decreasing effect sizes inversely correlated with journal Impact Factor, and diminishing effect sizes that ripple through lower impact factor journals (Munafò, Matheson & Flint, 2007; Brembs, Button & Munafò, 2013; Smaldino & McElreath, 2016), while negative results disappear almost entirely (Fanelli, 2012). We know that given the statistical treatments involved in accepting or rejecting hypotheses that both Type I and Type II errors must occur. But our human preference for positive results (Trivers, 2011), leads us to poor publication and citation choices. This book advocates for a change in this culture, but before we can change we need to be aware of the problems that the current system promotes, and why they are problematic.

One of the earliest studies to use a meta-analyses looked across many different studies in ecology and evolution and found a consistent effect of publication year, such that effect sizes decreased over time. Jennions and Møller (2002) concluded that this was due to publication bias with journals less willing to publish insignificant results, especially for studies with small sample sizes. The power of meta-analyses to look at many different studies that cover a topic is now widely recognised such that most of the studies cited in this chapter are meta-analyses that review topics. For insights into how to use meta-analyses to check for publication bias in the biological sciences, see Jennions et al. (2013) and Nagakawa and Santos (2012). It is important to remember that while meta-analyses may be able to show different kinds of publication bias, it does not mean that individual studies within these reviews are wrong, just that the influence of publication bias means they need to be treated with caution.

The phenomenon of publication bias is often reported as a single entity, but it is now recognised that it is made up of four discrete parts (Small study size, the decline effect, confirmation bias and citation bias), each of which are covered below. Although these four types of publication bias are recognised, they often act in synergy and it can be hard to tease them apart in individual cases. All of these types of publication bias require published material, but there is also the file drawer effect where critical publications, especially those showing negative results, are absent because they were never submitted for publication.

33.1 Small Study

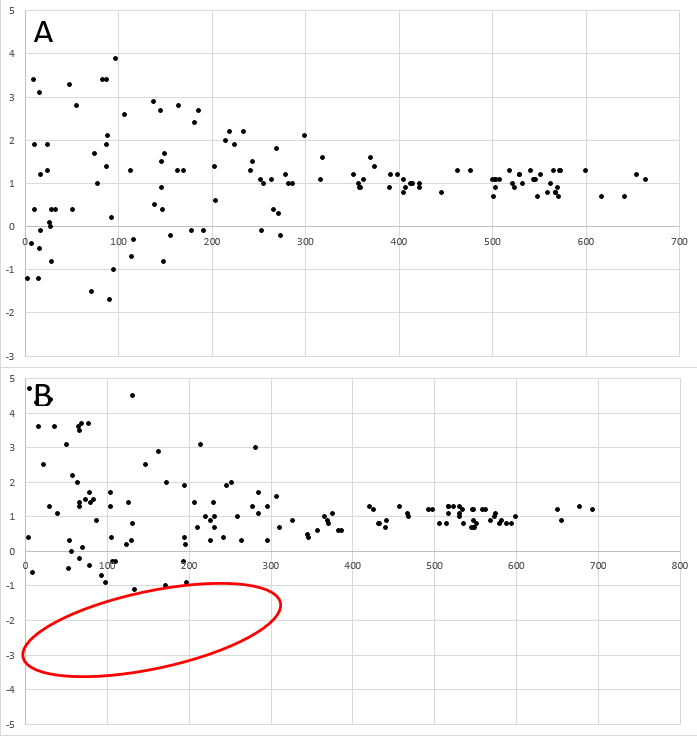

The small study effect suggests that non-significant studies with small sample sizes are under represented in the literature. This phenomenon can be represented graphically during a meta-analysis when the effect size and sample size of studies are plotted (Figure 33.1), they should represent a funnel shape (Sterne & Harbord, 2004).

FIGURE 33.1: A funnel plot showing the difference between expected changes in effect size for unbiased (A) and studies with small study size removed. In this plot with randomly generated data, the y-axis represents effect size while the x-axis shows sample size. Each point represents a published study. In B, we see that studies small studies non-significant results are underrepresented in the published literature (red oval), while they are present in the expected null set of studies (A).

Although the effect size declines in Figure 33.1A, it is not sloped. This simply shows the difference between an expected distribution of studies (Figure 33.1A), and when those with small sample sizes and non-significant results are not published (Figure 33.1B - cf file drawer effect).

The absence of publications of small studies with non-significant results is explained either (i) by the decisions of editors and reviewers, especially in journals with higher impact factors, not to accept studies that cannot replicate prior results without a boosted sample size, or (ii) the bias in what is expected by the researchers who decline to submit a small sample size for publication.

33.1.1 Blue tit plumage colour

Blue tits (Cyanistes caeruleus) are one of the most common songbirds in Europe. Their bright plumage has been studied extensively for evidence of its impact on sexual selection. Parker (2013) conducted a meta-analyses on published studies to determine the potential for publication bias relating to plumage colour and sexual selection in blue tits. Parker (2013) showed using funnel plots that there may be missing (unpublished) data for relationships between plumage colour and age and quality of blue tits. He also suggested that there was theory tenacity in the literature with researchers having likely committed Type I errors to obtain positive results, with ad hoc explanations of why non-confirmatory results occurred.

33.2 Decline effect

Decline effect is characterised by the phenomenon of decreasing effect sizes over time. The effect size in any type of study might not be exactly replicable with an increase in effect size heterogeneity, but over time similar studies should not present trends in effect sizes. We would only expect the effect size to significantly increase or decrease if there was a genuine reason. Examples of true changes in effect size might include the effect size on use of antibiotics given increasing antibiotic resistance, or growth rates of plants given an increase in atmospheric CO2. Other reasons for true changes in effect size may include changes in methodological approaches and equipment.

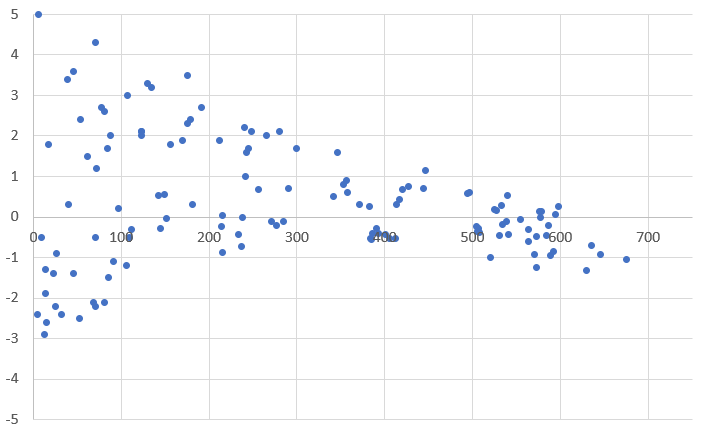

Often, studies with large effect sizes are characterized by low sample sizes, are often first published as ground breaking studies in high impact factor journals. They then gather disproportionate numbers of citations and consequently are highly influential in their field. If these studies are repeated with larger sample sizes, we would still expect them to be significant (Figure 33.1), but we would not expect that trend in significance to be anything but flat. If it showed a decline (Figure 33.2), then we would question whether there was any effect in any of the studies with smaller sample sizes.

FIGURE 33.2: A funnel plot showing that as sample size is increased effect size reduces and eventually becomes negative. In this plot with randomly generated data, the y-axis represents effect size while the x-axis shows sample size. Each point represents a published study.

33.2.1 The influence of parasites on their hosts’ behaviour

One of the first studies to question whether an accepted paradigm was correct by studying the cumulative change of effect size over time looked at the changes in host behaviour that parasites cause (Poulin, 2000). Poulin (2000) examined publications to determine whether the year in which they were published correlated negatively with the estimated relative influence of the parasite on the hosts’ behaviour. If no publication bias existed, then the year of publication should show no relationship with the effect size of the study. However, Poulin (2000) found a very strong negative relationship suggesting that there was a real decline effect in the effect size of studies over time.

33.2.2 House sparrow black bib size and dominance

The size of the small black patch on the throat of a house sparrow (Passer domesticus) has been suggested to signal conspecific dominance status. The hypothesis has been studied so much, that it became prominent as the text book example of patch ornamentation as a signal of status. Sánchez-Tójar and colleagues (2018b) conducted a meta-analysis on studies that had investigated this phenomenon. They found that the overall effect size was not significant, and therefore questioned the validity of this text book example.

33.2.3 The impacts of oceanic acidification on fish behaviour

Climate change is associated with an increase in the quantity of CO2 in the atmosphere, which in turn has increased the acidity of sea surface waters. Research into the impacts of pH on sea creatures became one of the fastest growing topics in marine biology in the 2000s. During this time, papers were published in high impact journals showing that low pH impacted fish behaviour (e.g., Munday et al., 2009). As is typical with the first movement of hypotheses, the first set of observations were published with large effect sizes on low sample sizes in high impact journals (see below). More and more studies were conducted following these ideas, but were unable to find such large effect sizes. Clements et al. (2022) published a meta-analysis on fish behaviour and ocean acidification showing that effect sizes decreased over time, often becoming indistinguishable from null findings. They questioned whether the initial studies had any value. Munday (2022), the author of some of the initial findings, responded that the reanalysis was flawed. Note that this is not the only controversy associated with his research see Part IV. It is also noteworthy that Clements et al. (2022) do not dismiss the possibility that there is an effect of oceanic acidification on fish behaviour, simply that it is not the large effect first shown in the initial research published, but that it might instead be negligible. They were also able to dismiss several of the potential variables associated with this behaviour: cold-water species, non-olfactory associated behaviours and non-larval life stages.

33.2.4 Sexual selection on male body size in Gambusia species

The impact of male body size on male-male competition and female choice in fish in the genus Gambusia has been studied extensively. Large males are thought to have an advantage in both sets of interactions, but researchers also found that small males are better at sneaky mating, which appears to occur at higher frequency. Taken together, these results suggest that there should not be selection for larger males in the genus Gambusia, despite the many reports to the contrary. Kim et al. (2021) conducted a meta-analysis on male body size across species in the genus Gambusia to test for selection on male body-size. These authors found some evidence for a diminishing effect of effect size with study sample size (see Figure 33.2).

33.3 Confirmation Bias

The failure to replicate previous findings are often thought to be uninteresting and may therefore not be selected for publication. This effect could be exasperated by disingenuous reviewers or editors who have a vested interest in seeing their own results confirmed, a type of Conflict of Interest.

33.3.1 Theory maturation

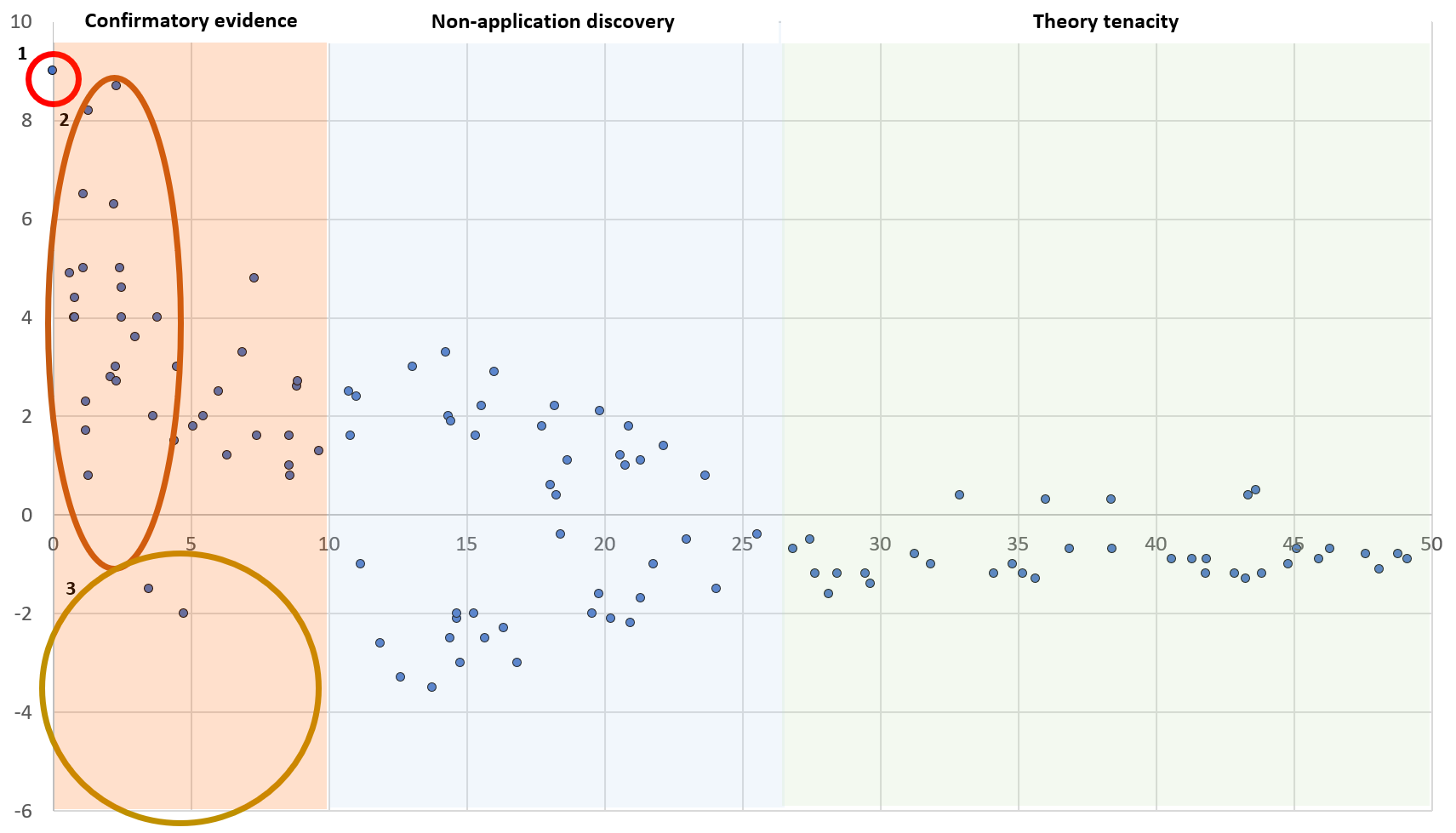

A particular aspect of confirmation bias is the concept of theory maturation (Figure 33.3). Awareness of the importance of theory tenacity, confirmation bias and data quality are important for biologists when considering which paper to cite (Barto & Rillig, 2012). Well cited papers that proposed new theories that were later disproved can continue to gather zombie citations. Theory maturation is considered to occur in three parts (Leimu & Koricheva, 2005):

FIGURE 33.3: A funnel plot demonstrating the three phases of theory maturation: confirmatory evidence, non-application discovery, and theory tenacity. In this plot, created with fictional data, the y-axis represents effect size while the x-axis shows time (in years) since an idea is first published (1). Each point represents a published study. In the first phase (confirmatory evidence), following the initial publication (1) we see a plethora of studies with large effect sizes (2) published in high impact journals that have no negative findings (3). In the second phase (non-application discovery), we see the first negative findings, but again studies with small effect sizes are missing, and there are still positive studies so that the theory has a great deal of debate. At the beginning of the third phase (theory tenacity), an important meta-analysis is published showing no significant overall effect. Publications thereafter attempt to replicate earlier results but fail to show any merit in the thory.

Confirmatory evidence: first studies are published (usually in high Impact Factor journals) with large effect sizes but mon small data sets that are highly influential. These studies could represent genuine examples of false-positive studies (Type I error). Next, confirmatory studies are published that confirm these new and trendy ideas, but studies that do not confirm them are either shelved or rejected (Loehle, 1987; Fanelli, 2010a).

Non-application discovery: Situations under which the theory does not hold are discovered (e.g. declining effect sizes)

Theory tenacity: the theory can live on despite the failure of subsequent studies to show significant effects (Fanelli, 2012).

This chapter has many examples of theory tenacity. Many of these have been written into textbooks are are likely still being taught in many classes. Whether or not these ideas are eventually debunked will rely, in part, on the diligence of teachers and textbook writers to update and re-write their texts over time.

Confirmation bias can occur along multiple places of the research cycle. For example, new and upcoming ideas are more likely to meet with the approval of funders and get funding. Similarly, the second or third manuscript supporting a newly published idea is more likely to be accepted by some (especially high impact) journals than the 30th or 31st. Because the research cycle time is rather long (write funding proposal, hire staff, conduct project, analyse, write-up and submit), studies that are able to shortcut part of the cycle (e.g. researchers with money from wealthy institutions) are likely to get ahead of this publishing curve on the confirmatory evidience part of the theory maturation scale, and have their studies published in highly impacting journals, albeit with reduced sample sizes comensurate with a quickly produced study.

33.3.2 The attractiveness of facial symmetry

An example of this kind of confirmation bias driven publishing effect toward bad science can be found in the literature of fluctuating asymmetry, and in particular those studies on human faces (Van Dongen, 2011). Back in the 1990s, there was a flurry of high profile articles purporting preference for symmetry (and against asymmetry) in human faces. The studies were (relatively) cheap and fast to conduct as the researchers had access to hundreds of students right on their doorsteps. The studies not only hit the top journals, but were very popular in the mainstream media as scientists were apparently able to predict which faces were the most attractive.

Stefan van Dongen (2011) hypothesised that if publication bias was leading to bad science in studies of fluctuating asymmetry in human faces, there would be a negative association between effect size and sample size. However, effect sizes can also be expected to be smaller in larger studies as these may come with less accurate measurements (Figure 33.1; see also Jennions & Møller (2002)). This negative association should not change depending on the stated focus of the study; i.e. if the result was the primary or secondary outcome. However, van Dongen (2011) found that in studies where the relationship between fluctuating asymmetry and attractiveness was a secondary outcome (not the primary focus of the study), the overall effect size did not differ from zero and no association between effect size and sample size was found. This was in contrast to the studies where the fluctuating asymmetry-attractiveness was a primary focus, suggesting that there was important publication bias in this area of research.

33.4 Citation Bias

Deciding what to cite comes with a plethora of decisions. Certainly, citing correctly is very important, and not to mis-cite (Measey, 2021). But in making your decision about what to cite you are probably influenced by a lot of different factors. By now, you are probably already aware of citation bias and how this can inflate the importance of certain publications beyond their actual worth. If not, then start by considering journal Impact Factor and consider what this actually means in terms of whether published papers have equal chances of being cited (sometimes referred to as Prestige Bias). Next consider the Matthew Effect, that well cited publications are likely to gather more citations. Next, think about the impacts of influential or elite researchers in your field. Barto and Rillig (2012) considered the different kinds of Dissemination Bias in citations of ecological studies. They found that the papers published first that had the strongest effect sizes and those published in high Impact Factor journals produced the most extreme effects - even though there was no correlation with quality of data.

Lastly, consider your own behaviour in promoting your own research to have it cited. Something as innocent as Tweeting your paper out to an academic audience has been shown to increase citations (Luc et al., 2021), causing a citation bias (sometimes referred to as the Showcase Effect) towards academics who are active on social media. Indeed, I promote the dissemination of your work by writing a press release, a popular article and improving your altmetrics. Each of these will likely improve the citation of your publication, in the same way that those who don’t do this will have less citations.

Sloppy citing is a phenomenon that you will doubtless become familiar with during the course of your career. Many citations are simply meeting the need to have a citation, instead of providing the evidence for the statement given. The literature is so vast (and doubleing every 12 years) such that it is hard to spot citation errors. Smith and Cumberledge (2020) studied 250 citations in high impact science journals (Science, Science Advances, PNAS, Nature Communications and Nature) to verify whether the propositions cited could be substantiated by the contents of the cited material. Even though the number of citations was low in this study, I can attest that it does represent a significant amount of work as I did a similar exercise to test the veracity of citations given by ChatGPT and Google’s Bard (see here). Their study found a citation error rate of 12.1%, and included 8.5% “unsubstantiated” and 3.6% “partially substantiated” (propositions with a minor error such as the quoted amount being inaccurate). They also included another 12.9% of citations as “impossible to substantiate” (either due to the lack of a proposition to substantiate, or that it was logically impossible to substantiate with a citation). This last, and largest, category contains citations that were not linked to propositions in the texts examined. For example, they may have been citing methodologies or further discussions. In my view, these are not mis-citations but a reality that a lot of papers cannot include unlimited text, and so need to refer readers on to other materials. My own view disagrees with Smith and Cumberledge (2020) when they assert that “there is no good reason to allow this type of inexact and non-verifiable referencing to pervade scientific literature”. I feel that there is no good reason to have our literature massively verbose when readers can simply ‘click-on’ to read the cited information. Clearly, this is an issue on which there will be differences of opinion.

I consider that many of the above citation errors and citation bias in general is caused by what I call the zombie citation effect where papers that have accumulated many citations over time are not actually referred to, but instead cited in a similar vein. This changes subtly over time such that they no longer (and never did) hold the information that many scientists think that they did (see Smith & Cumberledge, 2020). But the citations keep coming, because so many authors are not willing to check their sources thoroughly. Bucci (2019) made a similar point about retracted zombie papers that continue to draw citations. Presumably, this is a similar reason why AI bots also provide erroneous citations, because they are using existing citations as a basis for their own choices (Measey, 2021). I think of these citations as zombies as they will continue to receive incorrect citations over time, and this will not die because diligent scientists who do not cite them will not prevent the Matthew Effect.

33.4.1 The unexpected and unwelcome result effect

Some results are unexpected and unwelcome. They don’t chime with our prevailing world-view, and that means that we have a bias against publishing them. Sometimes, there is a community-wide dislike or distrust of a certain result, with many investigators feeling the same way about results that are unwelcome. This can result in a publication bias on several different levels. A good example of this is the story of species richness and habitat fragmentation and how this became confounded with patch size. The relationship between fragmentation and species richness has been regarded as intuitively negative. However, this intuitive result does not match the evidence. Sequential reviews have shown that when the total amount of habitat remains constant, there is no reduction in species richness with increasing fragmentation; instead, there is a weak but positive effect (Simberloff & Abele, 1982; Quinn & Harrison, 1988; Fahrig, 2003, 2017a).

Fahrig (2019) has come up with several ideas about why the scientific community perpetuates a dogma that runs against the evidence. For example, the very high citation rate that one of the original reviews (Fahrig, 2003) is made up of (at least) hundreds of mis-citations claiming the opposite effect (Fahrig, 2019). These perpetual mis-citations are likely a type of zombie citation effect where insufficient number of researchers are actually reading the paper they are citing. In addition to these mis-citations, there exists a clear publication bias in the literature (Fahrig, 2017b) which only seems attributable to the ‘unwelcome result effect’. Ultimately, it could be that ecologists, who write much of this literature, have so much prejudice towards large unbroken patches of conserved habitat that they cannot bring themselves to acknowledge the value of many fragments (Fahrig, 2019). This example of the ‘unexpected and unwelcome result effect’ in publication bias is fascinating, and I’m sure that it is not the only example. Read the entire story as told by Lenore Fahrig (2019).

33.4.2 The file drawer effect

Meta-analysis is only able to synthesise information from published data, and there will be an unknown and potentially unknowable amount of unpublished data that would be needed to provide a truly balanced view, the so-called file drawer effect: when studies that do not meet the expected results, or are initially rejected from journals with or without review, are placed into a filing drawer instead of being published (Csada, James & Espie, 1996; Fanelli, 2010b; Wood, 2020). Most biologists have examples of such studies, and a lot of student theses will fall into this category.

There is no easy solution to the file drawer effect as studies that fail to make the grade of peer review will likely be placed in a filing drawer and forgotten about. The current culture of many journals is also daunting to many researchers who get negative results - HARKing into a higher impact journal is more tempting. Preprints could form a valid place to provide an outlet for such manuscripts, as long as their data accompanies them.

33.4.3 Citation bias toward elite scientists

The idea that the ‘rich get richer’ is as much an economic reality today as it was for those who first described the Matthew effect over 2000 years ago. As papers begin to gather citations, so they are exposed to more readers who in turn are more likely to cite them further. Hence, we have seen (above) that there is good evidence that well cited papers are more likely to be cited again, even though the evidence that they contain maybe no better, or even worse, than equivalent papers that receive little or no citation attention.

Individual scientists are also a source for this kind of citation bias. In a study of 26 million scientific papers, Nielsen and Andersen (2021) found that the top 1% of scientists grossed between 14 and 21% of citations. These elite scientists have increased both the numbers of publications that they have authored, and also increased the fractional quantity (i.e. taking into account the number of authors on each paper) of citations that they gather per paper. This led Nielsen and Andersen (2021) to refer to these individuals as the ‘citation elite’. What we learn from their study is that the proportion of citations that the ‘citation elite’ get is growing over time. This despite the fact that the proportion of publications that the ‘citation elite’ are authoring has grown from 4 to 11%. The top 1% of scientists author more than 10% of all papers.

33.4.3.1 Gini coefficient for citation imbalance

A way in which this imbalance can be represented in a number is the Gini coefficient for citation imbalance, which is derived from economics: the Gini coefficient for income, wealth or consumption inequality.

A Gini coefficient of 0 represents perfect equality. In our case of citations, every paper published would receive an equal number of citations (total citations / total papers).

A Gini coefficient of 1 represents maximal inequality. For citations this would mean that one paper has all citations (total citations / 1 paper).

Low Gini coefficients (0.2 to 0.3) would suggest that some papers are disproportionately cited, and we might explain this by them having a broader appeal such as reviews and meta-analyses. Realistically, we cannot expect Gini coefficients to be 0, but low coefficients (0.2 to 0.3) are desirable as it suggests that much of what is published is valued. However, across the sciences, the figure is rising from 0.65 to 0.70 in the first 15 years of this century (Nielsen & Andersen, 2021). This constantly growing Gini coefficient for citation imbalance can be attributed to the ‘citation elite’. Instead of suggesting that all publications are valued, this very high Gini coefficient suggests that only a very small proportion of publications are valued. Moreover, this small number of publications is authored by an even smaller number of elite scientists.

33.4.3.2 Why do elite scientists garner more citations?

This is where you play your part. You may already be part of a group with an elite scientist in it. Or you may admire such a person from a distance. If so, you will be likely to admire their work and be positively biased towards citing what they author, even if they are only one of many authors. Perhaps you belong to a science social media site (see here), where you follow elite types and every paper they publish is provided to you in a feed. The more you are exposed to the work of these individuals, the more likely you are to cite it when you write. If there are particular individuals whose science you revere, and feel that your opinion is widely shared, then you may feel more secure in citing one of these influential elite scientists in your work. You may think that their work will be better accepted by your peers, including of course your reviewers. The more the elite are cited and become known by you and your peers, the more citations they will get.

33.4.3.3 Not every one of an elite’s papers will be a hit – the Pareto rule

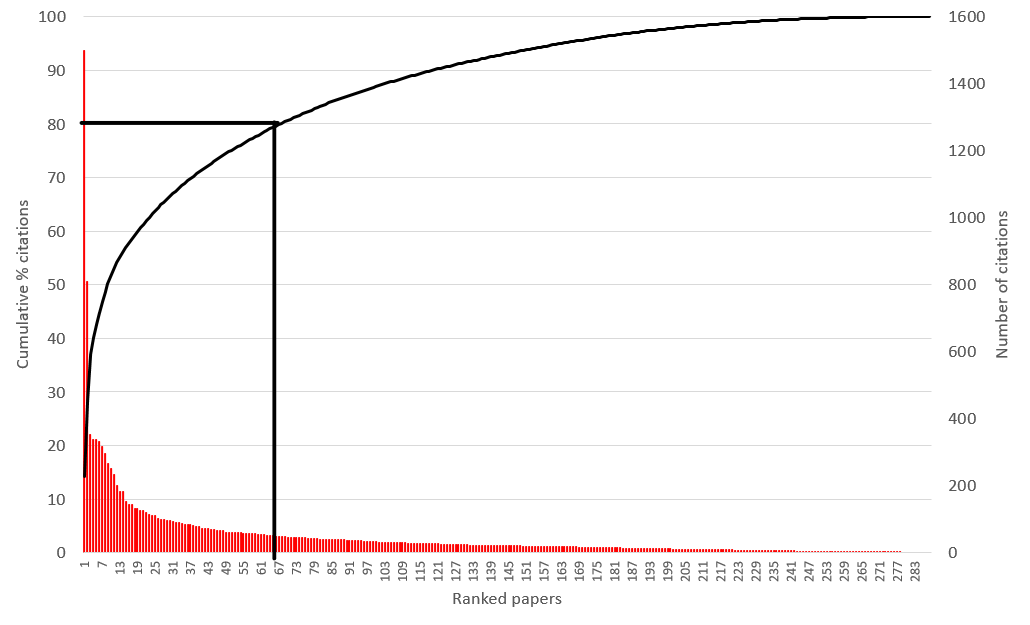

Elite scientists will publish many hundreds of papers, but not every one will receive a disproportionately high number of citations. A study of citations within the repertoire of top scientists suggests an 80–20 Pareto rule to their publications’ citations, where 20% of what they publish receives 80% of the citation attraction (Abramo, D’Angelo & Soldatenkova, 2016). The Pareto rule comes from business where it is claimed that 20% of a business’ affairs account for 80% of the effect.

You can collect this information very quickly for your own publications to see how you sit. Use a citation database, sort your publications by the number of times they have been cited and then plot this data as a cumulative percentage of your total citations (see Figure 33.4). When the curve hits 80%, how many of your publications are covered? A Pareto chart will typically also have a bar chart plotted on a second y-axis for each ranked papers’ citations, or their field citation ratio.

FIGURE 33.4: A Pareto chart for the citations for a fictional high-ranking scientist. Note how 80% of this author’s cumulative percentage of citations (horizontal line) intersects the x-axis at around 20% (vertical line) of the total publications for this scientist (N = 300). This suggests that this individual’s output conforms to the 80–20 Pareto rule for their publications’ citations. Also note how the highest cited paper has nearly 15% of all of this researcher’s total citations.

33.4.3.4 More prizes, funding and other benefits to the elite

The influence that these individuals receive goes beyond their peers and citations. Traditional media are also likely to follow and report upon their research. This will get them noticed by their own university’s administration and local, national and international politicians. Growing influence in these circles results in a positive effect on their careers. You can expect that these elite scientists are sitting in good (tenured) jobs, probably at prestigious universities, where they will also be getting some institutional bias toward their work. Your citations to their work will aid in their general welfare to get more prizes (Ma & Uzzi, 2018), funding (Aagaard, Kladakis & Nielsen, 2019) and other benefits that will accumulate in the same way that their citations grow.

Note that the networks in which these elite move are all important for their success. It is possible to predict who will get the prizes by studying the networks (Wagner et al., 2015; Ma & Uzzi, 2018).

This is not to say that prizes and good careers are not deserved by some of the scientific elite. Generally, these are people who work incredibly hard and do devote much of their time to advancing science and the careers of others. But it is clear that these people are receiving a disproportionate amount of prizes (Ma & Uzzi, 2018) and funding (Aagaard, Kladakis & Nielsen, 2019) to the detriment of other hard working scientists. There is also the problem that some of these elite behave badly, including bullying and fraud.

33.4.3.5 What can you do to prevent maintaining a citation elite?

Remember to read and cite critically. Do not be swayed by particular names, and what they publish, over the quality of the work that you are reading. Look for citing references and decide for yourself whether the evidence that is provided in a paper is worthy of citation. Remember that there are plenty of other factors involved in citation bias. It is up to you to be aware of these whenever you are writing and citing.

33.5 Natural selection of bad science

In 2016, Smaldino and McElreath proposed that ever increasing numbers of publications not only leads to bad science, but that we should expect to see this in an academic environment where publishing is considered a currency (Smaldino & McElreath, 2016). They argued that the most productive laboratories will be rewarded with more grant funding, larger numbers of students, and that these students will learn about the methods and benefits of prolific publications in high impact journals. When these ‘offspring’ of the prolific lab look for jobs, they are more likely to be successful as they have more publications themselves. An academic environment that rewards increasing numbers of publications eventually selects towards methodologies that produce the greatest number of publishable results. To show that this leads to a culture of ‘bad science’, Smaldino and McElreath (2016) conducted an analysis in trends over time of statistical power in behavioural science publications. Over time, better science should be shown by researchers increasing their statistical power as this will provide studies with lower error rates. However, increasing the statistical power of experiments takes more time and resources, resulting in fewer publications. Their results, from review papers in social and behavioural sciences, suggested that between 1960 and 2011 there had been no trend toward increasing statistical power. Biological systems, whether they be academics in a department or grass growing in experimental pots, will respond to the rewards generated in that system. When grant funding bodies and academic institutions reward publishing as a behaviour, it is inevitable that the behaviour of researchers inside that system will respond by increasing their publication output. Moreover, if those institutions maintain increasing numbers of researchers in temporary positions, those individuals are further incentivised to become more productive to justify their continued contracts, or the possibility of obtaining a (more permanent) position elsewhere. Eventually, this negative feedback, or gamification of publishing metrics, produces a dystopian and dysfunctional academic reality (Chapman et al., 2019; Helmer, Blumenthal & Paschen, 2020).

The story of how confirmation bias can adversely influence high flying researchers, leading to retractions and even the loss of their careers, is the subject of another chapter (see Part IV).